Last week a potential customer asked about using SparkR on DataStax Enterprise (DSE), and I had no idea whether this would work. I decided to test whether it was possible in my lab environment. After starting up my Cassandra and DSE Analytics cluster, I had to install a few things before I could actually use the SparkR shell. The first requirement was the right R version (3.x or newer) on the DSE Analytics node:

http://stackoverflow.com/questions/16093331/how-to-install-r-version-3-0
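Before installing anything else, it can help to confirm which R version the node actually has. The following is a minimal sketch, assuming the first line of R --version looks like "R version 3.0.2 (2013-09-25) ..."; the function names r_major_version and r_version_ok are hypothetical helpers for illustration, not part of R or SparkR.

```shell
#!/usr/bin/env bash
# Minimal sketch: check that the installed R is version 3 or newer.
# Assumption: the first line of `R --version` starts with "R version X.Y.Z".

# Hypothetical helper: print the major version number from an "R version X.Y.Z ..." line.
r_major_version() {
  printf '%s\n' "$1" | sed -n 's/^R version \([0-9][0-9]*\)\..*/\1/p'
}

# Hypothetical helper: succeed (exit status 0) if the version line is R 3.x or newer.
r_version_ok() {
  local major
  major=$(r_major_version "$1")
  [ -n "$major" ] && [ "$major" -ge 3 ]
}

# Run the real check only when R is actually on the PATH.
if command -v R >/dev/null 2>&1; then
  line=$(R --version | head -n 1)
  if r_version_ok "$line"; then
    echo "OK: $line"
  else
    echo "R is too old for SparkR: $line" >&2
  fi
fi
```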

With the right R version in place, I was able to start the R shell and install the required components (devtools, SparkR, etc.) as described here:

http://amplab-extras.github.io/SparkR-pkg/

First install rJava from the system shell, then install devtools from within the R shell (just type R at the shell prompt to start it):

sudo apt-get install r-cran-rjava

install.packages("devtools", dependencies = TRUE)

I also had to fix some libcurl issues, but they looked Ubuntu-specific. If you hit them too, you can fix them with apt-get:

sudo apt-get -y build-dep libcurl4-gnutls-dev

sudo apt-get -y install libcurl4-gnutls-dev

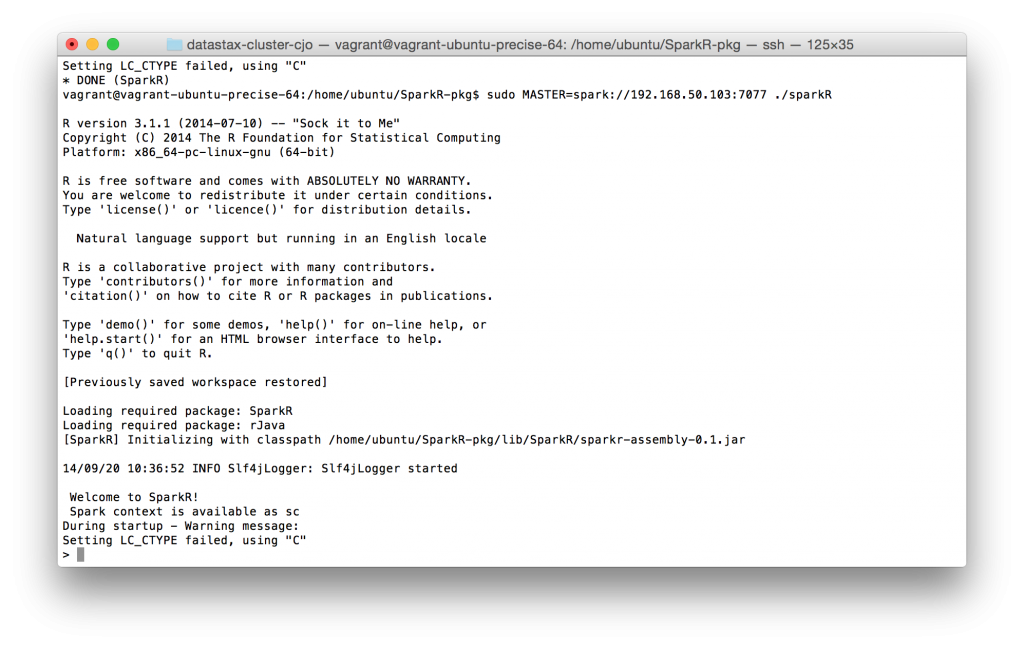

I also downloaded the binaries and saved them in my home directory, because I could not point to the Spark node from inside the R shell. After cloning and installing SparkR, I was able to connect the SparkR shell to my DSE Analytics node with:

sudo MASTER=spark://192.168.50.103:7077 ./sparkR

The SparkContext was then available as sc in the R shell.

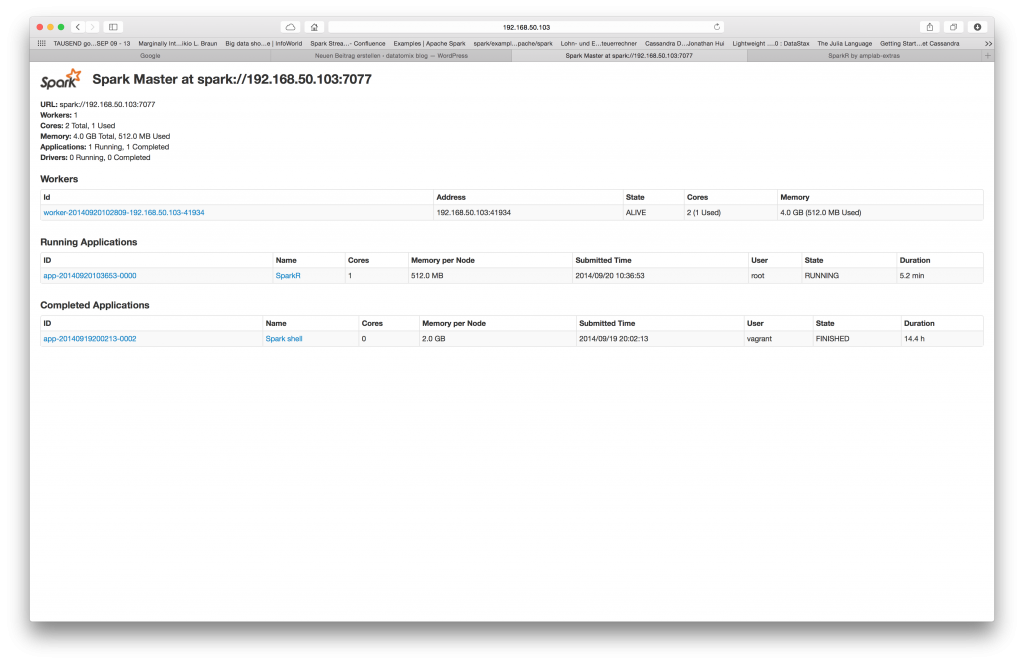

The SparkR process also shows up as registered on the Spark Master.
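The connect command above passes a Spark standalone master URL of the form spark://HOST:PORT, where 7077 is Spark's default standalone master port. If you reconnect often, a small wrapper can save retyping it. This is only a sketch: spark_master_url and start_sparkr are hypothetical helper names, and SPARKR_HOME is an assumed variable pointing at the directory that contains the ./sparkR launcher.

```shell
#!/usr/bin/env bash
# Sketch: build a Spark standalone master URL and launch the SparkR shell with it.
# Assumption: SPARKR_HOME (hypothetical) points at the SparkR checkout that
# contains ./sparkR; it defaults to the current directory.

# Hypothetical helper: print spark://HOST:PORT, with the port defaulting to 7077.
spark_master_url() {
  local host="$1"
  local port="${2:-7077}"
  echo "spark://${host}:${port}"
}

# Hypothetical helper: start the SparkR shell against the given master.
# The original command was run with sudo; prepend it here if your setup needs it.
start_sparkr() {
  local url
  url=$(spark_master_url "$1" "$2")
  (cd "${SPARKR_HOME:-.}" && MASTER="$url" ./sparkR)
}
```

With these definitions, start_sparkr 192.168.50.103 should be equivalent to running MASTER=spark://192.168.50.103:7077 ./sparkR from the SparkR directory.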

It seems to work, but I have no idea yet how to use R from here, or whether a cassandraTable/RDD is available 🙂