Yesterday I had the time to run my favourite MapReduce example on my Twitter data. And guess what, it's WordCount 🙂 To be honest, this one is available in Hadoop, Spark and Solr, and that's the main reason for this decision. As you might remember from the first part, there is a "text" field in my Twitter data model. This is the field I use for WordCount.

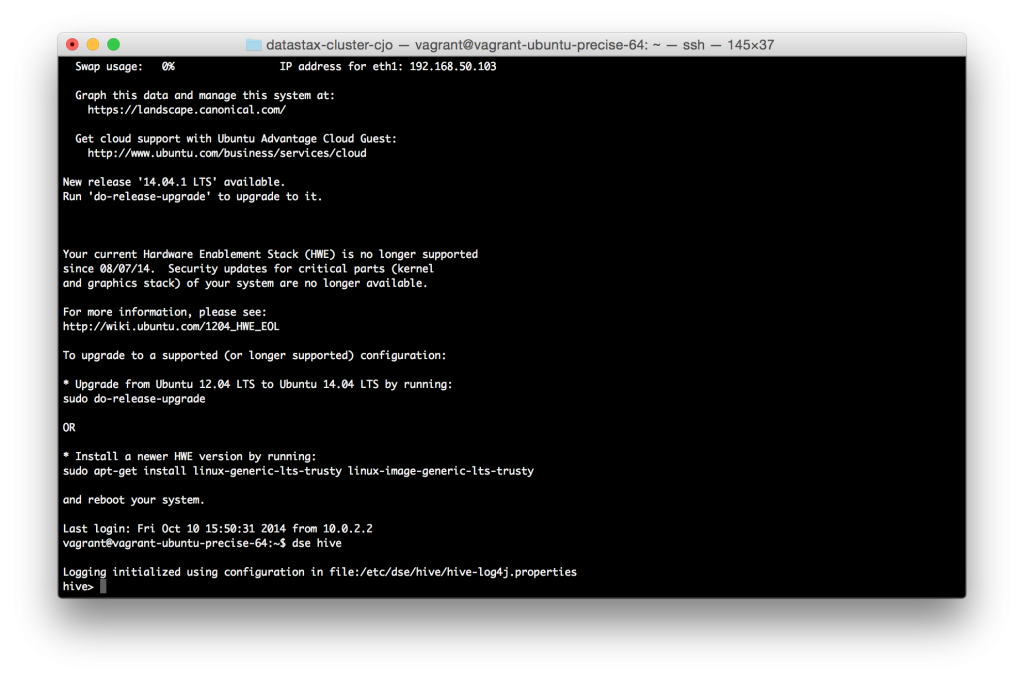

After I changed one node to be a Hadoop node (in /etc/default/dse) and changed the replication factor (so the data is replicated to that node), I was able to start "dse hive".

Hive is the easiest way to access the data via Hadoop without manually extracting it or copying it to CFS. After Hive was started, I issued the WordCount query:

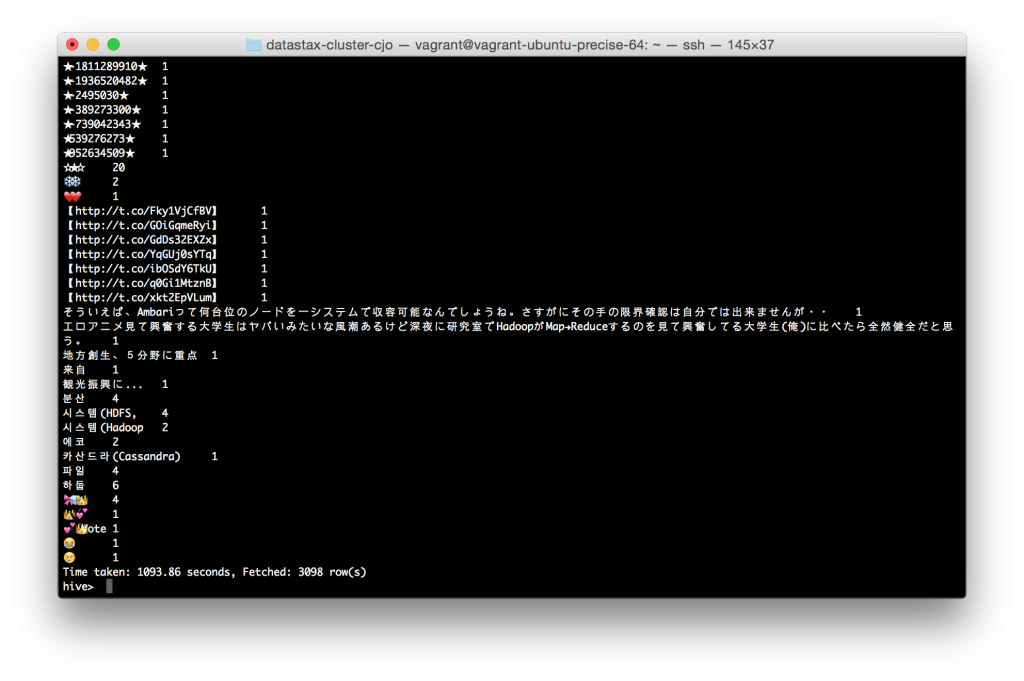

SELECT word, COUNT(*) FROM twittercan.tweets LATERAL VIEW explode(split(text, ' ')) lTable AS word GROUP BY word;
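To make it clearer what this query computes, here is a small Python sketch of roughly the same logic: split every text on a single space, "explode" the resulting words into one stream, and count each distinct word. The sample tweets and the function name `word_count` are made up for illustration, and the handling of empty strings and NULL texts is my understanding of how `split` and `explode` behave, not something verified on the cluster.

```python
from collections import Counter


def word_count(tweets):
    """Count words roughly like the Hive query: split each text on one space."""
    counts = Counter()
    for text in tweets:
        if text is None:
            # Assumption: a NULL text yields no rows after LATERAL VIEW explode()
            continue
        # Like split(text, ' '): consecutive spaces produce empty-string "words"
        counts.update(text.split(" "))
    return counts


# Hypothetical sample data, not taken from the real tweets table
sample_tweets = [
    "hadoop and hive",
    "hive on cassandra",
    "hello  hive",  # double space on purpose
    None,
]

if __name__ == "__main__":
    for word, n in sorted(word_count(sample_tweets).items()):
        print(repr(word), n)
```

Note that splitting on a single space is quite naive: punctuation stays attached to the words, "Hive" and "hive" are counted separately, and double spaces show up as an empty word. For a first WordCount that is good enough.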

You can follow the current state of the job in OpsCenter as well. As you can see, the WordCount took around 20 minutes on the current dataset.

This was probably the easiest way to do a WordCount on tweets 🙂